I have used Oracle Containers for J2EE (OC4J) to demonstrate this.

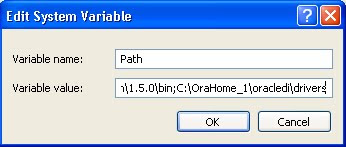

After installation, set two environment variables: JAVA_HOME and ORACLE_HOME.

JAVA_HOME="path of the latest JDK installed on the machine"

ORACLE_HOME="Path of the extracted J2EE Containers"
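Before starting the server, it can help to confirm that both variables are actually set. The following is only a rough sketch in Python, not part of the OC4J or ODI tooling; the function name `check_home_variables` is made up for illustration, and the only check it performs is that each variable points to an existing directory.

```python
import os
from pathlib import Path


def check_home_variables(env=None, names=("JAVA_HOME", "ORACLE_HOME")):
    """Return a dict mapping each problematic variable to a short description.

    An empty dict means every variable is set and points to an existing directory.
    `env` defaults to the real process environment; pass a dict to test other values.
    """
    env = os.environ if env is None else env
    problems = {}
    for name in names:
        value = env.get(name)
        if not value:
            # Variable is missing or empty
            problems[name] = "not set"
        elif not Path(value).is_dir():
            # Variable is set but does not point to a directory on this machine
            problems[name] = f"not a directory: {value}"
    return problems
```

Calling `check_home_variables()` with no arguments inspects the current environment; an empty result suggests the two variables are ready for the next step.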

Open the command prompt, go to ORACLE_HOME/bin and start the application server using the command oc4j -start.

Open any web browser and enter the URL http://hostname:8888/

8888 is the default port for the OC4J containers
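If the page does not load, a quick script can tell you whether anything is answering on that port. This is a minimal sketch using only the Python standard library; `oc4j_url` and `is_reachable` are hypothetical helper names, and `hostname` stands for whatever machine runs your OC4J instance.

```python
import urllib.error
import urllib.request

DEFAULT_OC4J_PORT = 8888  # the default port mentioned above


def oc4j_url(hostname, port=DEFAULT_OC4J_PORT, path=""):
    """Build a URL such as http://hostname:8888/ or http://hostname:8888/oracledimn."""
    return f"http://{hostname}:{port}/{path.lstrip('/')}"


def is_reachable(url, timeout=5.0):
    """Return True if a server answers the request at all, even with an error status."""
    try:
        with urllib.request.urlopen(url, timeout=timeout):
            return True
    except urllib.error.HTTPError:
        # The server responded, just not with a success status
        return True
    except (urllib.error.URLError, OSError):
        # Connection refused, host not found, timeout, and similar failures
        return False
```

For example, `is_reachable(oc4j_url("hostname"))` returning `False` would suggest the server is not started yet or is listening on a different port.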

Click on Launch Application Server Control

Log in with oc4jadmin as the user name and the password set during installation of the application server.

Select the Deploy button

Browse to oracledimn.war, located in ODI_INSTALLDIR/setup/manual

Select Next

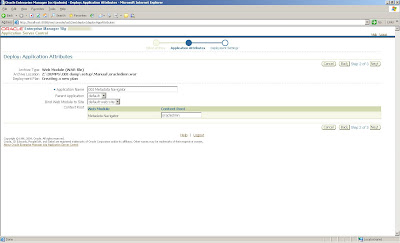

Specify the application name as ODI Metadata Navigator

The application is deployed

Browse to ODI_HOME\oracledi\bin

Copy snps_login_work.xml

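Place it in OC4J_HOME\j2ee\home\applications\APP_NAME\oracledimn\WEB-INF, where OC4J_HOME is the OC4J directory (the ORACLE_HOME set earlier) and APP_NAME is the application name given during deployment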
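The copy step is easy to get wrong because of the long target path, so here is a rough Python sketch of it. The function `copy_login_file` is a hypothetical helper, not something shipped with ODI; it simply assembles the two paths described above and refuses to copy if either side is missing.

```python
import shutil
from pathlib import Path


def copy_login_file(odi_home, oc4j_home, app_name):
    """Copy snps_login_work.xml from the ODI bin directory into the web app's WEB-INF.

    Returns the path of the copied file. Raises FileNotFoundError if the source
    file or the WEB-INF directory does not exist.
    """
    source = Path(odi_home) / "oracledi" / "bin" / "snps_login_work.xml"
    target_dir = (Path(oc4j_home) / "j2ee" / "home" / "applications"
                  / app_name / "oracledimn" / "WEB-INF")
    if not source.is_file():
        raise FileNotFoundError(f"login file not found: {source}")
    if not target_dir.is_dir():
        # Usually means the application has not been deployed under this name
        raise FileNotFoundError(f"WEB-INF directory not found: {target_dir}")
    # copy2 keeps the file's timestamps and returns the destination path
    return Path(shutil.copy2(source, target_dir))
```

You would call it with your own values for ODI_HOME, OC4J_HOME and APP_NAME. A `FileNotFoundError` on the WEB-INF directory is a hint that the deployment name differs from the one you passed in.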

Open URL: http://hostname:8888/oracledimn

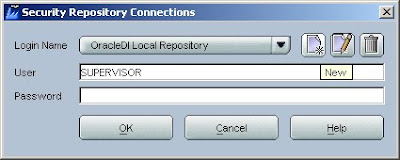

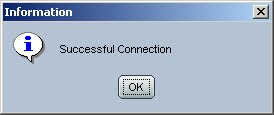

Enter the username and password specified in the settings for accessing Designer

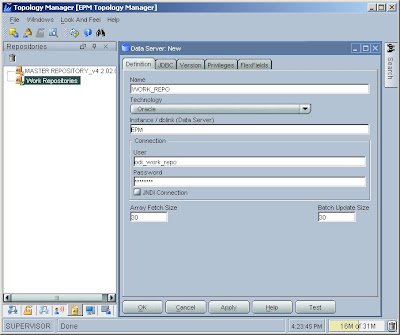

Bravo! Metadata Navigator is working, with the projects listed. I had created just one project, called Demo, which is visible.

You are now ready to browse through the different Scenarios, KMs used, Topology settings, etc.

ODI Metadata Navigator is a neat tool for executing scenarios. Other than that, it acts as a central web-based tool for looking at the different Topologies, Knowledge Modules used, variables, etc. It is not used for any kind of development. It took me a while to set up Metadata Navigator; I hope this saves you some precious time!